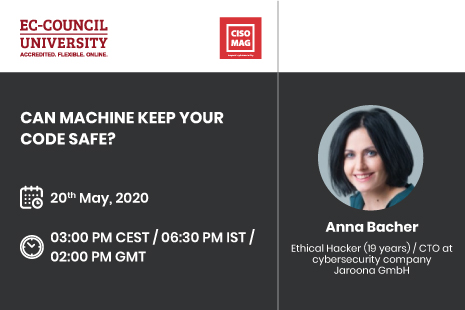

Speaker: Anna Bacher

Designation: Ethical Hacker (19 years) / CTO at cybersecurity company Jaroona GmbH

Topic: Can a machine keep your code safe?

Date of Webinar: 20th May, 2020

Time and Location: 03:00 pm CEST/ 06:30 pm IST/ 02:00 pm GMT

Anna Bacher is co-founder and CTO of Jaroona GmbH, the world’s most advanced machine-learning-based enterprise application security platform. She is an Ethical Hacker, CTO, and developer with nineteen years of experience in developing and penetrating complex systems for the top 100 international banks, telecom companies, and payment processors. Anna is a co-author of an important patent for peer-to-peer network security. Throughout her career as a security architect, she has been an innovator in the fields of network security, end-point protection, security analytics, machine learning, and threat defense portfolios.

In her current position at Jaroona, she manages a team of highly skilled professionals in cybersecurity, ethical hacking, and deep learning, focusing on creating developers’ tools for source code protection. They have built an AI-based vulnerability detection and repair tool that alerts developers to critical vulnerabilities in their code while they are writing it and provides automated fix suggestions.

Topic Abstract:

Attackers weaponize AI.

Since the early 1990s, every major machine learning open-source release has been closely followed by new attacker toolkits that add obfuscation and anti-decoding to avoid detection, use polymorphism to evade antivirus tools, and deliver extremely volatile and dynamic payloads to avoid detection by network intrusion detection systems. Some attacker toolkits can detect a user’s environment and deliver specific, targeted exploits. We live in an era of weaponized AI.

It’s time for developers to catch up with attackers and utilize AI to protect their applications. The best protection starts by writing secure code without vulnerabilities that can be exploited later.

Easier said than done.

Even responsible programmers fail to implement secure coding practices, for a variety of reasons. Not all programmers have the necessary knowledge of secure coding standards, and many of the existing code analysis tools are unwieldy and ineffective, with poor performance and major usability issues.

Developers need help.

This webinar demonstrates how my research team, leveraging the latest advances in NLP (Natural Language Processing), has trained a machine to write fixes and security patches for potential vulnerabilities in source code with high precision and confidence.

The system mines a codebase of over 15 million Java and C/C++ files, creating a knowledge base that provides fixes for more than 170 categories of vulnerabilities.

Traditionally, NLP research has focused on language translation, text generation, sentence completion, grammar correction, and question answering. The first applications of NLP research to programming languages, which focused on code auto-completion, appeared in 2019. My team is among the first to apply NLP research and architectures to automatic security patch generation.

As part of this webinar, I will share my view as an ethical hacker on whether we can trust a machine’s auto-generated security patches to be legitimate. How can we be sure that the machine is not planting a vulnerability in our code? Just as an AI or ML system can be programmed to assist with cybersecurity, one could also be programmed to perform cyberattacks and never get caught.

At the end of this session, I will address the concerns voiced by many prominent high-tech entrepreneurs and researchers regarding the dangers to human society posed by AI. “As AI gets much smarter than humans, the relative intelligence ratio is probably similar to that between a person and a cat, maybe bigger,” Elon Musk told technology journalist Kara Swisher. “I do think we need to be very careful about the advancement of AI.”

As AI is applied to cybersecurity, at some point, we will build and train machines that are so much more competent than we are that the slightest divergence between their goals and our own could convert them into black hat hackers.

As we contemplate creating AI that can make changes to itself, it seems that we will only have one chance to get the initial conditions right. And that time is now.

*Examples, analysis, views, and opinions shared by the speakers are personal and are not endorsed by EC-Council or their respective employer(s).